TCP Receive Window Size Auto-Tuning

Apr 14, 2020: The Receive Window Auto-Tuning feature lets the operating system continually monitor routing conditions such as bandwidth, network delay, and application delay. The operating system can therefore configure connections by scaling the TCP receive window.

TCP Receive Window Scaling. The ability to increase the receive window would be meaningless without window scaling. On its own, TCP allows a window size of only 64 KB, and operating systems back through Windows XP use this as their default value on fast links. The window scaling option lets window sizes scale to megabytes and beyond.

Jan 11, 2018: The TCP window scale option is used to increase the maximum window size from 65,535 bytes to 1 gigabyte.

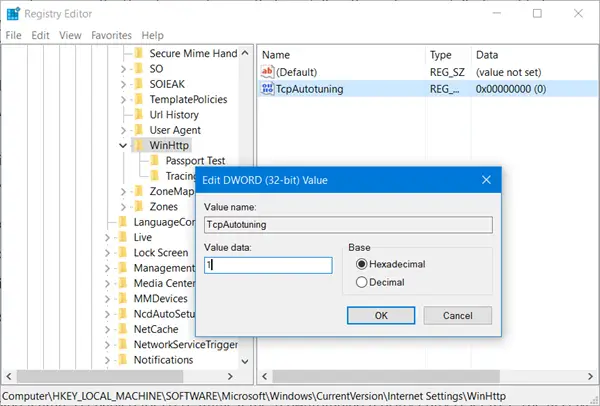

May 05, 2016: netsh interface tcp set global autotuninglevel=highlyrestricted

TCP auto-tuning and slow network performance. For the most part, I have seen the TCP auto-tuning slow network performance issue come into play when new server and client operating systems talk to legacy server operating systems like Windows.

Windows (Vista/7/8/etc.) will automatically set, and more importantly increase, the size of the TCP receive window for you, as needed, to maximize throughput. Microsoft calls this automatic management of the receive window size 'auto-tuning'.

The Receive Window Auto-Tuning feature is enabled for HTTP traffic only if the TcpAutotuning registry entry is set to 1. It is not enabled for HTTP traffic if the entry does not exist or is set to any other value.

The issues mentioned under 'Large TCP Windows' are arguments in favor of 'buffer auto-tuning', a promising but relatively new approach to better TCP performance in operating systems. See the TCP auto-tuning zoo reference for a description of some approaches. Microsoft introduced (receive-side) buffer auto-tuning in Windows Vista.

The TCP window scale option is an option to increase the receive window size allowed in Transmission Control Protocol above its former maximum value of 65,535 bytes. This TCP option, along with several others, is defined in IETF RFC 1323 which deals with long fat networks (LFNs).

TCP windows

The throughput of a communication is limited by two windows: the congestion window and the receive window. The congestion window tries not to exceed the capacity of the network (congestion control); the receive window tries not to exceed the capacity of the receiver to process data (flow control). The receiver may be overwhelmed by data if for example it is very busy (such as a Web server). Each TCP segment contains the current value of the receive window. If, for example, a sender receives an ack which acknowledges byte 4000 and specifies a receive window of 10000 (bytes), the sender will not send packets after byte 14000, even if the congestion window allows it.
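The flow-control example above can be worked through in a few lines. This is only a sketch: the function names are hypothetical, and the congestion window value of 20000 bytes is an assumed figure chosen to show that the receive window is the binding limit here.

```python
def highest_sendable_byte(acked_byte: int, receive_window: int) -> int:
    """Last byte offset the sender may transmit under flow control alone."""
    return acked_byte + receive_window


def effective_window(congestion_window: int, receive_window: int) -> int:
    """The sender is bounded by the smaller of the two windows."""
    return min(congestion_window, receive_window)


# Values from the text: an ACK for byte 4000 advertising a 10000-byte window.
limit = highest_sendable_byte(acked_byte=4000, receive_window=10000)

# Assumed congestion window of 20000 bytes (not from the text).
window = effective_window(congestion_window=20000, receive_window=10000)

print(limit)   # 14000
print(window)  # 10000
```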

Theory


The TCP window scale option is needed for efficient transfer of data when the bandwidth-delay product (BDP) is greater than 64 KiB. For instance, if a T1 transmission line of 1.5 Mbit/s is used over a satellite link with a 513 millisecond round-trip time (RTT), the bandwidth-delay product is 1,500,000 × 0.513 = 769,500 bits, or about 96,187 bytes. A maximum buffer size of 64 KiB only allows the buffer to be filled to (65,535 / 96,187) = 68% of the theoretical maximum speed of 1.5 Mbit/s, or 1.02 Mbit/s.
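The satellite-link arithmetic can be checked with a short calculation. This is a minimal sketch with hypothetical function names. It uses only the figures given in the text: 1.5 Mbit/s, 513 ms RTT, and a 65,535-byte window.

```python
def bandwidth_delay_product_bytes(bandwidth_bps: float, rtt_seconds: float) -> float:
    """Bytes that must be in flight to keep the link full for one RTT."""
    return bandwidth_bps * rtt_seconds / 8


def window_limited_throughput_bps(window_bytes: int, bandwidth_bps: float,
                                  rtt_seconds: float) -> float:
    """At most one window per RTT can be delivered, capped by the link rate."""
    return min(bandwidth_bps, window_bytes * 8 / rtt_seconds)


bdp = bandwidth_delay_product_bytes(1_500_000, 0.513)
throughput = window_limited_throughput_bps(65_535, 1_500_000, 0.513)

print(f"BDP: {bdp:,.1f} bytes")                       # about 96,187.5 bytes
print(f"Window utilisation: {65_535 / bdp:.0%}")      # about 68%
print(f"Throughput: {throughput / 1e6:.2f} Mbit/s")   # about 1.02 Mbit/s
```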

By using the window scale option, the receive window size may be increased up to a maximum value of 1,073,725,440 bytes ((2^16 - 1) × 2^14, or 65,535 × 16,384). This is done by specifying a one-byte shift count in the header options field. The true receive window size is the 16-bit window field value left-shifted by the shift count. A maximum value of 14 may be used for the shift count. This would allow a single TCP connection to transfer data over the example satellite link at 1.5 Mbit/s, utilizing all of the available bandwidth.
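The shift arithmetic can be illustrated as follows. This is a sketch with hypothetical helper names, not a TCP implementation. The limits of 65,535 for the window field and 14 for the shift count are the ones stated in the text.

```python
MAX_WINDOW_FIELD = 0xFFFF  # largest value of the 16-bit window field (65,535)
MAX_SHIFT = 14             # largest permitted shift count


def scaled_window(window_field: int, shift_count: int) -> int:
    """True receive window: the header field left-shifted by the shift count."""
    if not 0 <= window_field <= MAX_WINDOW_FIELD:
        raise ValueError("window field must fit in 16 bits")
    if not 0 <= shift_count <= MAX_SHIFT:
        raise ValueError("shift count must be between 0 and 14")
    return window_field << shift_count


def minimum_shift_for(window_bytes: int) -> int:
    """Smallest shift count whose maximum scaled window covers window_bytes."""
    for shift in range(MAX_SHIFT + 1):
        if (MAX_WINDOW_FIELD << shift) >= window_bytes:
            return shift
    raise ValueError("window exceeds the maximum scalable size")


print(scaled_window(MAX_WINDOW_FIELD, MAX_SHIFT))  # 1073725440
print(minimum_shift_for(96_188))                   # 1 (covers the satellite link BDP)
```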

Essentially, not more than one full transmission window can be transferred within one round-trip time period. The window scale option enables a single TCP connection to fully utilize an LFN with a BDP of up to 1 GB, e.g. a 10 Gbit/s link with round-trip time of 800 ms.

Possible side effects

Because some firewalls do not properly implement TCP Window Scaling, it can cause a user's Internet connection to malfunction intermittently for a few minutes, then appear to start working again for no reason. There is also an issue if a firewall doesn't support the TCP extensions.[1]

Configuration of operating systems

Windows

TCP Window Scaling has been implemented in Windows since Windows 2000.[2][3] It is enabled by default in Windows Vista / Server 2008 and newer, but can be turned off manually if required.[4] Windows Vista and Windows 7 have a fixed default TCP receive buffer of 64 kB, scaling up to 16 MB through 'autotuning', which limits manual TCP tuning over long fat networks.[5]

Linux

Linux kernels (from 2.6.8, August 2004) have enabled TCP Window Scaling by default. The configuration parameters are found in the /proc filesystem: see the pseudo-file /proc/sys/net/ipv4/tcp_window_scaling and its companions /proc/sys/net/ipv4/tcp_rmem and /proc/sys/net/ipv4/tcp_wmem (more information: man tcp, section sysctl).[6]

Scaling can be turned off by issuing the command sysctl -w 'net.ipv4.tcp_window_scaling=0' as root. To maintain the change after a restart, include the line 'net.ipv4.tcp_window_scaling=0' in /etc/sysctl.conf (or /etc/sysctl.d/99-sysctl.conf as of systemd 207).
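The same pseudo-files can be read from a script. The sketch below assumes a Linux host and uses hypothetical helper names. The three-field format of tcp_rmem (minimum, default, and maximum buffer size in bytes) follows man tcp, and the sample values in the usage line are illustrative only, not recommended settings.

```python
from pathlib import Path
from typing import NamedTuple


class TcpBufferLimits(NamedTuple):
    minimum: int
    default: int
    maximum: int


def parse_buffer_limits(text: str) -> TcpBufferLimits:
    """Parse the 'min default max' triple used by tcp_rmem and tcp_wmem."""
    fields = text.split()
    if len(fields) != 3:
        raise ValueError(f"expected 3 fields, got {len(fields)}")
    return TcpBufferLimits(*(int(field) for field in fields))


def window_scaling_enabled(proc_root: str = "/proc/sys/net/ipv4") -> bool:
    """Return True unless tcp_window_scaling contains 0."""
    return Path(proc_root, "tcp_window_scaling").read_text().strip() != "0"


def receive_buffer_limits(proc_root: str = "/proc/sys/net/ipv4") -> TcpBufferLimits:
    """Read and parse tcp_rmem from the given directory."""
    return parse_buffer_limits(Path(proc_root, "tcp_rmem").read_text())


# Illustrative sample line; real values differ between systems.
print(parse_buffer_limits("4096\t131072\t6291456"))
```

On a Linux machine, calling window_scaling_enabled() and receive_buffer_limits() with no arguments reads the live settings. The proc_root parameter exists only so the functions can be pointed at a test directory.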

FreeBSD, OpenBSD, NetBSD and Mac OS X

The default setting for FreeBSD, OpenBSD, NetBSD and Mac OS X is to have window scaling (and other features related to RFC 1323) enabled.

To verify their status, a user can check the value of the 'net.inet.tcp.rfc1323' variable via the sysctl command: sysctl net.inet.tcp.rfc1323

A value of 1 (output 'net.inet.tcp.rfc1323=1') means scaling is enabled; 0 means it is disabled. If enabled, it can be turned off by issuing the following command as root: sysctl -w net.inet.tcp.rfc1323=0

This setting is lost across a system restart. To ensure that it is set at boot time, add the following line to /etc/sysctl.conf: net.inet.tcp.rfc1323=0

Sources

- [1] 'Network connectivity may fail when you try to use Windows Vista behind a firewall device'. Support.microsoft.com. Retrieved July 11, 2019.

- [2] 'Description of Windows 2000 and Windows Server 2003 TCP Features'. Support.microsoft.com. Retrieved July 11, 2019.

- [3] 'TCP Receive Window Size and Window Scaling'. Archived from the original on January 1, 2008.

- [4] 'Network connectivity fails when you try to use Windows Vista behind a firewall device'. Microsoft. July 8, 2009.

- [5] 'MS Windows'. Fasterdata.es.net. Retrieved July 11, 2019.

- [6] '/proc/sys/net/ipv4/* Variables'.

Applies To: Windows Server 2012


Determining the correct tuning settings for your network adapter depends on the following variables:

- The network adapter and its feature set

- The type of workload performed by the server

- The server hardware and software resources

- Your performance goals for the server

If your network adapter provides tuning options, you can optimize network throughput and resource usage to achieve optimum throughput based on the parameters described above.

The following sections describe some of your performance tuning options.


Enabling Offload Features

Turning on network adapter offload features is usually beneficial. Sometimes, however, the network adapter is not powerful enough to handle the offload capabilities with high throughput. For example, enabling segmentation offload can reduce the maximum sustainable throughput on some network adapters because of limited hardware resources. However, if the reduced throughput is not expected to be a limitation, you should enable offload capabilities, even for this type of network adapter.

Note

Some network adapters require offload features to be independently enabled for send and receive paths.

Enabling Receive Side Scaling (RSS) for Web Servers

RSS can improve web scalability and performance when there are fewer network adapters than logical processors on the server. When all the web traffic is going through the RSS-capable network adapters, incoming web requests from different connections can be simultaneously processed across different CPUs.

It is important to note that due to the logic in RSS and Hypertext Transfer Protocol (HTTP) for load distribution, performance might be severely degraded if a non-RSS-capable network adapter accepts web traffic on a server that has one or more RSS-capable network adapters. In this circumstance, you should use RSS-capable network adapters or disable RSS on the network adapter properties Advanced Properties tab. To determine whether a network adapter is RSS-capable, you can view the RSS information on the network adapter properties Advanced Properties tab.

RSS Profiles and RSS Queues

RSS predefined profiles are new in Windows Server 2012.

The default profile is NUMA Static, which changes the default behavior from previous versions of the operating system. To get started with RSS Profiles, you can review the available profiles to understand when they are beneficial and how they apply to your network environment and hardware.

For example, if you open Task Manager and review the logical processors on your server, and they seem to be underutilized for receive traffic, you can try increasing the number of RSS queues from the default of 2 to the maximum that is supported by your network adapter. Your network adapter might have options to change the number of RSS queues as part of the driver.

Increasing Network Adapter Resources

For network adapters that allow manual configuration of resources, such as receive and send buffers, you should increase the allocated resources. Some network adapters set their receive buffers low to conserve allocated memory from the host. The low value results in dropped packets and decreased performance. Therefore, for receive-intensive scenarios, we recommend that you increase the receive buffer value to the maximum.

Note

If a network adapter does not expose manual resource configuration, it either dynamically configures the resources, or the resources are set to a fixed value that cannot be changed.

Enabling Interrupt Moderation

To control interrupt moderation, some network adapters expose different interrupt moderation levels, buffer coalescing parameters (sometimes separately for send and receive buffers), or both.

You should consider interrupt moderation for CPU-bound workloads, and weigh the trade-off: moderation saves host CPU time at the cost of higher latency, whereas less moderation means more interrupts, which lowers latency but increases host CPU usage. If the network adapter does not perform interrupt moderation, but it does expose buffer coalescing, increasing the number of coalesced buffers allows more buffers per send or receive, which improves performance.

Performance Tuning for Low Latency Packet Processing

Many network adapters provide options to optimize operating system-induced latency. Latency is the elapsed time between the network driver processing an incoming packet and the network driver sending the packet back. This time is usually measured in microseconds. For comparison, the transmission time for packet transmissions over long distances is usually measured in milliseconds (three orders of magnitude larger). This tuning will not reduce the time a packet spends in transit.

Following are some performance tuning suggestions for microsecond-sensitive networks.

- Set the computer BIOS to High Performance, with C-states disabled. However, note that this is system and BIOS dependent, and some systems will provide higher performance if the operating system controls power management. You can check and adjust your power management settings from Control Panel or by using the powercfg command. For more information, see Powercfg Command-Line Options.

- Set the operating system power management profile to High Performance System. Note that this will not work properly if the system BIOS has been set to disable operating system control of power management.

- Enable Static Offloads, for example, UDP Checksums, TCP Checksums, and Large Send Offload (LSO).

- Enable RSS if the traffic is multi-streamed, such as high-volume multicast receive.

- Disable the Interrupt Moderation setting for network card drivers that require the lowest possible latency. Remember, this can use more CPU time and it represents a tradeoff.

- Handle network adapter interrupts and DPCs on a core that shares CPU cache with the core that is being used by the program (user thread) that is handling the packet. CPU affinity tuning can be used to direct a process to certain logical processors in conjunction with RSS configuration to accomplish this. Using the same core for the interrupt, DPC, and user mode thread exhibits worse performance as load increases because the ISR, DPC, and thread contend for the use of the core.

System Management Interrupts

Many hardware systems use System Management Interrupts (SMI) for a variety of maintenance functions, including reporting of error correction code (ECC) memory errors, legacy USB compatibility, fan control, and BIOS controlled power management. The SMI is the highest priority interrupt on the system and places the CPU in a management mode, which preempts all other activity while it runs an interrupt service routine, typically contained in BIOS.

Unfortunately, this can result in latency spikes of 100 microseconds or more. If you need to achieve the lowest latency, you should request a BIOS version from your hardware provider that reduces SMIs to the lowest degree possible. These are frequently referred to as “low latency BIOS” or “SMI free BIOS.” In some cases, it is not possible for a hardware platform to eliminate SMI activity altogether because it is used to control essential functions (for example, cooling fans).

Note

The operating system can exert no control over SMIs because the logical processor is running in a special maintenance mode, which prevents operating system intervention.

Performance Tuning TCP

You can tune TCP performance using the following items: TCP receive window auto-tuning, the Windows Filtering Platform, and TCP parameters. Details are provided in the following sections.

TCP Receive Window Auto-Tuning

Prior to Windows Server 2008, the network stack used a fixed-size receive-side window that limited the overall potential throughput for connections. One of the most significant changes to the TCP stack is TCP receive window auto-tuning. You can calculate the total throughput of a single connection when you use this fixed size default as:

Total achievable throughput in bytes per second = TCP window size in bytes * (1 / connection latency in seconds)

For example, with a fixed 64 KB window, the total achievable throughput is only about 51 Mbps on a 1 Gbps connection with 10 ms latency, which is a reasonable latency for a large corporate network infrastructure.
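The formula above can be evaluated directly. The sketch below uses hypothetical function names and assumes the fixed window is 64 KB taken as 64,000 bytes, which reproduces the figure of about 51 Mbps. A 65,535-byte window would give roughly 52 Mbps.

```python
def max_throughput_mbps(window_bytes: int, latency_seconds: float) -> float:
    """Throughput ceiling for one connection: window * (1 / latency)."""
    bytes_per_second = window_bytes * (1 / latency_seconds)
    return bytes_per_second * 8 / 1_000_000


def window_needed_bytes(line_rate_bps: float, latency_seconds: float) -> float:
    """Window size required to sustain the full line rate at this latency."""
    return line_rate_bps * latency_seconds / 8


# Assumed 64,000-byte window at 10 ms latency.
print(f"{max_throughput_mbps(64_000, 0.010):.1f} Mbps")          # 51.2 Mbps

# Window a 1 Gbps link would need at the same latency.
print(f"{window_needed_bytes(1_000_000_000, 0.010):,.0f} bytes")  # 1,250,000 bytes
```

The second figure shows why a growing receive window matters: filling a 1 Gbps link at 10 ms latency needs a window of about 1.25 MB, far beyond a fixed 64 KB.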

With auto-tuning, however, the receive-side window is adjustable, and it can grow to meet the demands of the sender. It is entirely possible for a connection to achieve the full line rate of a 1 Gbps connection. Network usage scenarios that might have been limited in the past by the total achievable throughput of TCP connections can now fully use the network.

Windows Filtering Platform

The Windows Filtering Platform (WFP) that was introduced in Windows Vista and Windows Server 2008 provides APIs to non-Microsoft independent software vendors (ISVs) to create packet processing filters. Examples include firewall and antivirus software.

Note

A poorly written WFP filter can significantly decrease a server’s networking performance.

For more information, see Windows Filtering Platform in the Windows Dev Center.

TCP Parameters

The following registry keywords from Windows Server 2003 are no longer supported, and they are ignored in Windows Server 2012, Windows Server 2008 R2, and Windows Server 2008.

- TcpWindowSize

- NumTcbTablePartitions

- MaxHashTableSize
